Modernising in AWS: Elastic Beanstalk to Fargate

For many teams new to the AWS Cloud, AWS Elastic Beanstalk can be an easy way in.

It’s great for rapidly prototyping certain types of applications, and for fast-tracking your product development, particularly if you’re a young startup. It can quickly stand up web applications end-to-end, which is useful for demonstrating new features in sprint reviews or for producing working wireframes for your next client pitch. This is most likely what got you into it in the first place. No surprises there.

But maybe things are getting quite serious now: you’re facing exponential growth in traffic and juggling competing priorities such as reliability, performance and cost optimisation (among other things). Perhaps your hairline is making a rapid retreat to warmer climes at the thought of it all. It’s what business administrators might call an ‘inflection point’. Major change is afoot, and you need to adapt quickly in order to survive it.

Unfortunately, while Beanstalk can be a useful tool for small projects and teams – at least initially – it doesn’t provide the level of customisation and granular controls required by high-performing organisations. ECS + Fargate could be just the answer you’re looking for.

In this blog post we’ll take a look at Amazon ECS and AWS Fargate, and how modernising can address some of the challenges above.

Containers in AWS

We assume that this is reasonably new territory for you, so let’s introduce the AWS services that support container-based operations.

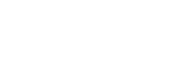

AWS offers three managed services for deploying Docker containers in the Cloud:

- AWS Elastic Beanstalk (EB) with Single Container Docker

- Amazon Elastic Container Service (ECS) with AWS Fargate

- Amazon Elastic Kubernetes Service (EKS)

(Three ways to run Docker on AWS)

Elastic Beanstalk

As implied by the title of this blog post, we assume you are already acquainted with Beanstalk and are planning to migrate away from it. We’ll therefore keep this section fairly brief, as we know how precious your time is.

Basic Usage

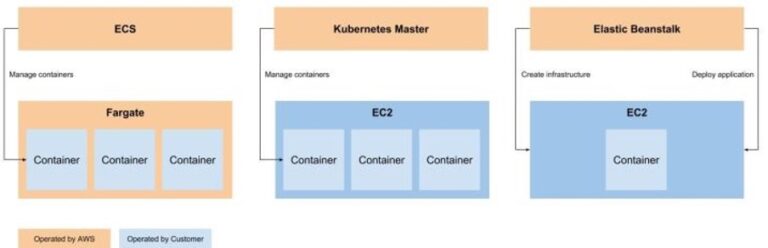

As you probably know, you can simply upload your code and have Elastic Beanstalk automatically handle the deployment, from capacity provisioning, load balancing and auto-scaling to application health monitoring.

You retain full control over the underlying AWS resources powering your application, and can access them at any time. Once your application is deployed, you can manage your environment and release new application versions using the pipelines provided by Beanstalk.

(Elastic Beanstalk Workflow)

Benefits

- Automatically launches environments by creating and configuring the AWS resources needed to run your code.

- Removes any infrastructure or resource configuration work on your part.

- Creates and manages deployment pipelines for you.

- Supports applications developed in Java, .NET, PHP, Node.js, Python, Ruby and Go, as well as Docker containers.

- Runs applications on familiar servers such as Apache, Nginx, Passenger and IIS.

Drawbacks

Unreliable Deployments

- When a deployment fails, there is no notification, and subsequent deployments fail as well.

- Workarounds such as terminating the instance that had the deployment issue and letting Elastic Beanstalk recover won’t necessarily resolve the problem.

- Even if an instance recovers, you may not be able to identify the underlying fault, which leaves you unsure whether the machine is actually healthy.

Deployment Speeds

- Deployments can be slow: at least five minutes, and sometimes as long as fifteen, for a site with just two front-end servers. With more servers, deployments take longer still.

Stack Upgrades

- Elastic Beanstalk releases new stack (platform) versions frequently, but doesn’t clearly communicate what has changed.

Defaults

- Environment builds typically use the default VPC and a Classic Load Balancer.

Amazon ECS

If you’re unfamiliar with ECS, then welcome to CaaS (Containers as a Service) in AWS.

Amazon Elastic Container Service (ECS) is an AWS-managed service, designed to make it easy to run containerised applications in the Cloud. You can easily deploy containers to host a simple website or run complex, distributed microservices, comprising thousands of containers, without having to constantly fret about how those containers are distributed.

ECS Components

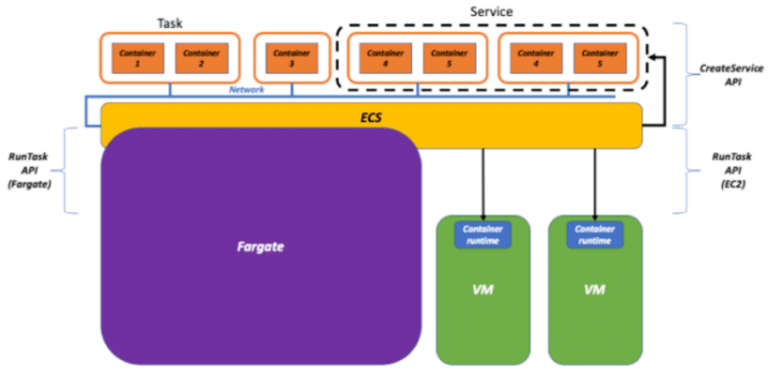

Task Definitions

Roughly analogous to Docker Compose files, task definitions act as blueprints for how containers should be configured: which image to use, how much memory and CPU to reserve, how log streams should be processed and which network ports to open. Container-to-container networking is declared here too, to support common microservice patterns such as sidecar containers.

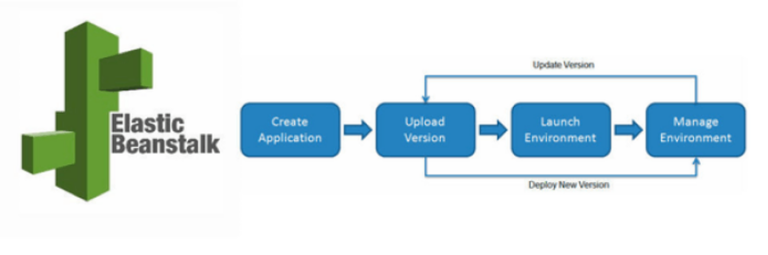
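To make the blueprint idea concrete, here is a minimal sketch of a task definition expressed as the Python dictionary you would pass to the ECS `RegisterTaskDefinition` API. The family name, image URIs, log group and Region are hypothetical placeholders. The second container is a sidecar that starts after the main app and, because the task uses `awsvpc` networking, can reach it on `localhost`.

```python
import json


def web_task_definition(image: str, log_group: str, region: str) -> dict:
    """Build a RegisterTaskDefinition request body (all names are hypothetical)."""

    def logs(prefix: str) -> dict:
        # Ship each container's stdout/stderr to CloudWatch Logs.
        return {
            "logDriver": "awslogs",
            "options": {
                "awslogs-group": log_group,
                "awslogs-region": region,
                "awslogs-stream-prefix": prefix,
            },
        }

    return {
        "family": "web-app",        # hypothetical family name
        "networkMode": "awsvpc",    # containers in the task share one network namespace
        "containerDefinitions": [
            {
                "name": "app",
                "image": image,
                "cpu": 256,         # CPU units reserved (1024 = 1 vCPU)
                "memory": 512,      # hard memory limit in MiB
                "essential": True,  # if this container stops, the whole task stops
                "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
                "logConfiguration": logs("app"),
            },
            {
                # Sidecar: reaches the app on localhost:8080 thanks to awsvpc mode.
                "name": "sidecar",
                "image": "example/sidecar:latest",  # hypothetical image
                "cpu": 64,
                "memory": 128,
                "essential": False,
                "dependsOn": [{"containerName": "app", "condition": "START"}],
                "logConfiguration": logs("sidecar"),
            },
        ],
    }


if __name__ == "__main__":
    td = web_task_definition(
        "123456789012.dkr.ecr.eu-west-2.amazonaws.com/web:1.0",  # placeholder URI
        "/ecs/web-app",
        "eu-west-2",
    )
    print(json.dumps(td, indent=2))
```

With boto3 installed and credentials configured, `boto3.client("ecs").register_task_definition(**td)` should submit a body of this shape; ECS then stores it as a new revision of the `web-app` family.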

Tasks

A task is a running instance of a task definition: one or more containers that ECS schedules together.

Clusters

An Amazon ECS cluster is a logical grouping of tasks or services. If you are running tasks or services that use the EC2 launch type, a cluster is also a grouping of container instances. If you are using capacity providers (such as Fargate), then a cluster is also a logical grouping of capacity providers.

Services

A service is like an Auto Scaling group for tasks. It defines how many tasks to run across the cluster and where they should run (for example, across multiple Availability Zones), automatically registers them with a load balancer, and scales them horizontally based on metrics that you define, such as CPU or memory utilisation.
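To illustrate scaling on a metric, here is a simplified model of target tracking: if average CPU sits above the target, the desired task count grows in proportion, and vice versa. This is an approximation for intuition only, not AWS’s actual algorithm (Application Auto Scaling also applies cooldowns and other safeguards), and the function name and default bounds are hypothetical.

```python
import math


def desired_task_count(running: int, metric: float, target: float,
                       min_tasks: int = 1, max_tasks: int = 20) -> int:
    """Simplified target tracking: scale the task count in proportion to load.

    running -- tasks currently running in the service
    metric  -- observed average utilisation (e.g. CPU %) across those tasks
    target  -- utilisation you want each task to sit at
    """
    if running <= 0 or target <= 0:
        raise ValueError("running and target must be positive")
    # Total load stays roughly constant, so tasks needed = running * metric / target.
    proposed = math.ceil(running * metric / target)
    # Never go outside the service's configured bounds.
    return max(min_tasks, min(max_tasks, proposed))


print(desired_task_count(running=4, metric=90.0, target=60.0))                # 6: scale out
print(desired_task_count(running=10, metric=30.0, target=60.0, min_tasks=2))  # 5: scale in
```

The min/max clamp mirrors the minimum and maximum capacity you would set on a real service, so a quiet period never scales you to zero and a spike never runs away with your bill.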

ECS+EC2

The first flavour of ECS involves self-managing a fleet of EC2 servers, upon which ECS will deploy and manage your containers automatically.

This used to be the only supported ECS model, before the advent of serverless compute. As you can imagine, or have perhaps experienced, managing the underlying hosts is not without its burdens, and it distracts you from customer- and product-focused activities. It also often results in under-utilised EC2s driving up your bill. Not ideal, then.

Under the hood

Working with ECS in this way involves installing an agent on each container host, so that it can interact with the ECS control plane. With that agent in place on the EC2s, the control plane can then automatically orchestrate container deployments, based on the Docker images and configuration you declare in your Task Definitions.

(Image source: Amazon Web Services)

AWS makes deploying ECS-ready EC2s pretty straightforward by providing an ECS-optimised Amazon Machine Image (AMI) with the ECS agent pre-installed. The ECS console can launch instances from this AMI for you, or you can do so from the CLI.
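One detail the console hides: an instance launched from the ECS-optimised AMI decides which cluster to join by reading the `ECS_CLUSTER` setting from the agent’s config file, `/etc/ecs/ecs.config` (without it, the agent registers with the cluster named `default`). That setting is commonly written via EC2 user data. The sketch below only renders that user-data script as a string; the cluster name is hypothetical, and you would still pass the result to your launch template or CLI call yourself.

```python
import re


def ecs_user_data(cluster_name: str) -> str:
    """Render EC2 user data that registers the instance with an ECS cluster."""
    # Cluster names may use letters, numbers, hyphens and underscores (up to 255).
    if not re.fullmatch(r"[A-Za-z0-9_-]{1,255}", cluster_name):
        raise ValueError(f"invalid cluster name: {cluster_name!r}")
    return "\n".join([
        "#!/bin/bash",
        # The ECS agent reads this file at start-up to decide which cluster to join.
        f"echo ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config",
        "",
    ])


print(ecs_user_data("demo-cluster"))
```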

Immediate Benefits over Elastic Beanstalk

- One of the biggest advantages is the flexibility to change the underlying EC2 instances: as traffic or demand increases, you can change instance types and sizes however you see fit.

- The Amazon ECS APIs are succinct and reliable; you can start and stop tasks (and therefore their containers) with a single POST request.

- API-driven control. Because everything is exposed through the API, a simple portal can control every container. This lets non-technical teams (sales, marketing) start an environment for a demo, for example, without keeping the solution running for the entire period.
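To show what “a single POST request” looks like in practice, this sketch builds the headers and JSON body for the ECS `RunTask` action. It deliberately stops short of sending anything: real requests must be signed with AWS Signature Version 4 (the SDKs and CLI do this for you), and the cluster, task definition, subnet and security group IDs are hypothetical placeholders.

```python
import json


def run_task_request(cluster: str, task_definition: str, subnets: list[str],
                     security_groups: list[str], count: int = 1) -> tuple[dict, str]:
    """Return (headers, body) for an unsigned ECS RunTask call."""
    headers = {
        # ECS speaks the AWS JSON 1.1 protocol: the action is named in X-Amz-Target.
        "Content-Type": "application/x-amz-json-1.1",
        "X-Amz-Target": "AmazonEC2ContainerServiceV20141113.RunTask",
    }
    body = {
        "cluster": cluster,
        "taskDefinition": task_definition,  # family[:revision]
        "count": count,
        "launchType": "EC2",
        # Needed when the task definition uses the awsvpc network mode.
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
            }
        },
    }
    return headers, json.dumps(body)


headers, body = run_task_request("demo-cluster", "web-app:1",
                                 ["subnet-0abc"], ["sg-0abc"])
print(headers["X-Amz-Target"])
print(body)
```

Stopping a task is the mirror image: the `StopTask` action with a body naming the `cluster` and the `task` to stop.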

Limitations

Capacity constraints

It is up to you to choose the appropriate instance types, sizes, and quantity for your cluster fleet (the EC2s). You should provision enough EC2 capacity to support scaling your container workloads to meet any projected loads, as well as to spread them across enough failure domains (e.g. availability zones) to support high availability, if that’s needed.

Features like EC2 Auto Scaling groups can take some of the heavy lifting out of this process. However, new EC2 instances can take several minutes to launch and join the cluster, so they do not scale out very quickly. That leads us back to deploying over-provisioned (or under-utilised) EC2s to cater for spiky or unpredictable traffic.
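The capacity-planning arithmetic above can be sketched roughly as follows. It assumes identical tasks, a single instance type and an even spread across Availability Zones: each instance holds as many tasks as its scarcer resource allows, and each zone carries its share of the peak plus some headroom. The function names, headroom figure and sizes are illustrative assumptions, and the sketch ignores the CPU and memory consumed by the OS, Docker and the ECS agent.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def instances_needed(peak_tasks: int, task_cpu: int, task_mem_mib: int,
                     inst_cpu: int, inst_mem_mib: int,
                     zones: int = 3, headroom_pct: int = 20) -> int:
    """Estimate how many EC2 container instances a cluster needs at peak.

    CPU is in ECS CPU units (1024 = 1 vCPU); memory is in MiB.
    """
    # Tasks per instance are limited by whichever resource runs out first.
    per_instance = min(inst_cpu // task_cpu, inst_mem_mib // task_mem_mib)
    if per_instance == 0:
        raise ValueError("a single task does not fit on this instance type")
    # Add headroom for spikes, then split the load evenly across zones.
    tasks_with_headroom = ceil_div(peak_tasks * (100 + headroom_pct), 100)
    tasks_per_zone = ceil_div(tasks_with_headroom, zones)
    # Each zone needs whole instances; multiply back up for the cluster total.
    return ceil_div(tasks_per_zone, per_instance) * zones


# 40 tasks of 0.5 vCPU / 1 GiB on 2 vCPU / 8 GiB instances across 3 zones:
print(instances_needed(40, 512, 1024, 2048, 8192))  # 12
```

Note how CPU is the binding constraint here (4 tasks per instance), leaving half of each instance’s memory idle: exactly the kind of under-utilisation described above.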

Cluster constraints

At the compute layer, each cluster follows a fixed set of auto-scaling rules and automatically provisions a single type of EC2 instance (because every instance is launched from the same AMI and configuration).

Some containers within the cluster may need different scaling behaviour at the compute layer, but this is simply not supported by ECS+EC2. It cannot adjust the instance type automatically, which is what leads to over-provisioned, under-utilised EC2s.

Load-balancing constraints

Also, sometimes several containers in the same task need their own load balancer. This is not possible with ECS+EC2.

Maintenance

Despite AWS providing Amazon Linux and Windows AMIs pre-configured for ECS, the Shared Responsibility Model means that you will be responsible for the majority of the maintenance and security of your infrastructure. This is one of the major drawbacks of classic ECS.

Ongoing maintenance will include:

- EC2 Operating System

- Docker

- ECS agent

- Container images

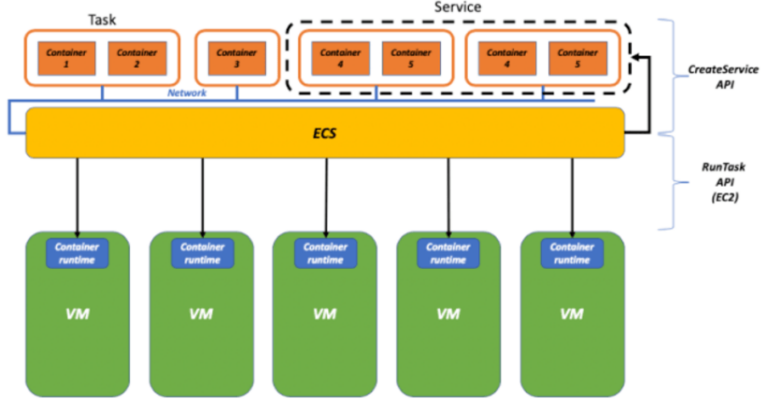

Fargate

So, now that we know ECS gives you more direct control over underlying deployments than Elastic Beanstalk does, how can we sidestep that annoying problem of having to manage the EC2s, their heavy maintenance and their ever-changing capacity requirements?

This is exactly where Fargate comes in.

It’s Serverless

(Image source: Amazon Web Services)

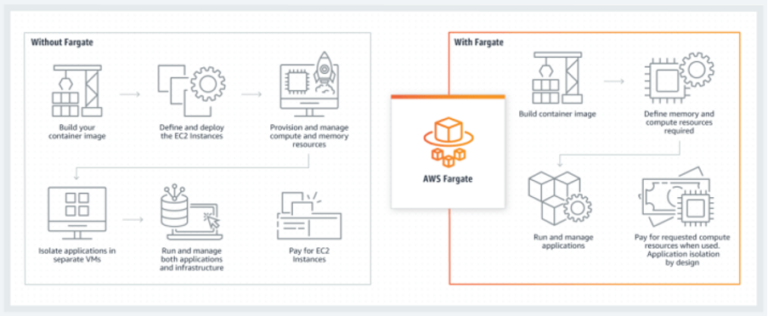

The obvious way to avoid managing servers is to get rid of them altogether. Of course, servers must still exist somewhere (in data centres, for example), but by removing server-related maintenance and security from your own list of responsibilities, you can simplify and streamline your business, and de-risk at the same time.

Fargate is fully serverless, so no more server maintenance for you!

Compared to Beanstalk & ECS+EC2

Before Fargate was born, ECS+EC2 was used to provide detailed control over container environments, while Elastic Beanstalk was used to abstract those details and just run the containers for you.

The problem, for many, was that the two sat at opposite ends of the container spectrum: ECS was flexible but too maintenance-heavy, whilst Beanstalk was pretty inflexible but relatively maintenance-free. ECS+Fargate was invented to answer this problem, by sitting squarely in the middle of that spectrum.

With Fargate, you no longer need to patch, scale or monitor virtual machines. Think of Fargate as a flexible, production-grade Platform-as-a-Service offering for Docker containers.

As a fully-managed service, Fargate places the vast majority of maintenance responsibility on AWS’s shoulders. That in turn provides you with a quick and easy-to-use, readily scalable compute engine, available on an on-demand (OpEx) basis; you just pay for what you need, when you need it, no strings attached.

You also no longer have to manage multi-layered access rules. Because each Fargate task gets its own elastic network interface and security groups, you can fine-tune the permissions between your containers just as you would between individual EC2 instances.

Deploying Your First Fargate Containers

Migrating to Fargate is really easy: you simply create a new cluster, choose the Fargate engine instead of EC2, specify an image to deploy and the amount of CPU and memory you need. Fargate will handle all of the updating and securing of the underlying Linux OS, Docker daemon, and ECS agent, as well as all the infrastructure capacity management and scaling, for you. You can deploy a working PoC in minutes. Sounds a breeze, right? That’s because it is.
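The “amount of CPU and memory” step has one wrinkle: Fargate only accepts certain task-level CPU and memory pairings, and a Fargate task definition must use the `awsvpc` network mode and declare `FARGATE` compatibility. The sketch below encodes the Linux combinations listed in the ECS documentation as we understand them (AWS has extended them over time, and the 8 and 16 vCPU sizes need platform version 1.4.0 or later, so check the current docs) and builds a minimal `RegisterTaskDefinition` body. The function names, family name and image are hypothetical choices.

```python
def fargate_memory_options(cpu: int) -> list[int]:
    """Valid Linux Fargate memory sizes (MiB) for a task-level CPU value."""
    options = {
        256: [512, 1024, 2048],
        512: list(range(1024, 4096 + 1, 1024)),
        1024: list(range(2048, 8192 + 1, 1024)),
        2048: list(range(4096, 16384 + 1, 1024)),
        4096: list(range(8192, 30720 + 1, 1024)),
        8192: list(range(16384, 61440 + 1, 4096)),    # platform version 1.4.0+
        16384: list(range(32768, 122880 + 1, 8192)),  # platform version 1.4.0+
    }
    if cpu not in options:
        raise ValueError(f"unsupported Fargate CPU value: {cpu}")
    return options[cpu]


def fargate_task_definition(family: str, image: str, cpu: int, memory: int) -> dict:
    """Build a minimal RegisterTaskDefinition body for the Fargate launch type."""
    if memory not in fargate_memory_options(cpu):
        raise ValueError(f"{memory} MiB is not valid with {cpu} CPU units on Fargate")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # required for Fargate
        "cpu": str(cpu),          # task-level sizes are passed as strings
        "memory": str(memory),
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
            }
        ],
    }


td = fargate_task_definition("hello-fargate", "nginx:latest", 256, 512)
print(td["cpu"], td["memory"])  # 256 512
```

From there, a service or a `RunTask` call with the launch type set to `FARGATE` is all that stands between you and a running container; there are no instances to size or patch.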

Advantages

- Less complexity

- AWS-managed security

- AWS-managed maintenance

- Focus on your customers, not yourselves

- Reduced costs (vs over-provisioned EC2s) – only pay for containers when they’re running

- Ideal for tasks with peaks in memory and/or CPU usage, as it scales out faster than EC2 instances.

Disadvantages

- Less customisable than EC2

If you have special governance, risk management and compliance requirements that demand absolute control of the compute layer, then Fargate may not meet them. Carefully consider whether Fargate is really for you.

- Higher Costs (Maybe)

To subsidise all that managed maintenance we mentioned above, Amazon charges Fargate users a higher per-hour fee than EC2-backed solutions for equivalent compute. Check the pricing against your baseline compute and resource requirements, and determine whether the trade-offs fit your business model. In addition, running your container workloads in the Cloud will likely be more expensive than operating your own infrastructure on-premises. What you gain in ease of use, you lose in flexibility and performance.

- Regional Availability

At the time of writing, AWS Fargate is still rolling out across Amazon’s Cloud Regions and is not yet available in all of them. Check the AWS website for up-to-date regional support.
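The advice to check pricing against your baseline boils down to simple arithmetic. Fargate bills for the vCPU and memory a task requests, for as long as it runs, while an EC2 instance is billed whether or not it is full. The sketch below compares the two over one month; every rate in it is a made-up placeholder, not a real AWS price, so substitute current figures for your Region (and any Savings Plans discount you hold).

```python
from dataclasses import dataclass


@dataclass
class Rates:
    # Hypothetical placeholder prices, NOT real AWS pricing.
    fargate_vcpu_hour: float = 0.04
    fargate_gb_hour: float = 0.0045
    ec2_instance_hour: float = 0.10  # imagined 2 vCPU / 8 GB instance


def fargate_monthly(tasks: int, vcpu: float, gb: float, hours: float, r: Rates) -> float:
    """Cost of `tasks` Fargate tasks that each run for `hours` in the month."""
    return tasks * hours * (vcpu * r.fargate_vcpu_hour + gb * r.fargate_gb_hour)


def ec2_monthly(instances: int, hours: float, r: Rates) -> float:
    """Cost of an always-on EC2 fleet, billed regardless of utilisation."""
    return instances * hours * r.ec2_instance_hour


r = Rates()
# Spiky workload: four 0.5 vCPU / 1 GB tasks busy ~200 hours a month,
# versus two instances left running all month (~730 hours) to cover the peaks.
print(f"Fargate: {fargate_monthly(4, 0.5, 1.0, 200, r):.2f}")  # Fargate: 19.60
print(f"EC2:     {ec2_monthly(2, 730, r):.2f}")               # EC2:     146.00
```

With these invented rates, the same 2 vCPU / 8 GB of capacity costs more per hour on Fargate (0.116) than on EC2 (0.10), yet Fargate wins comfortably when the EC2 fleet sits idle for most of the month. For a steady, well-packed workload the comparison can easily go the other way, which is why the baseline check matters.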

Conclusion

As you have seen, ECS is relatively simple to start using. The hardest part, for most people, is getting a Docker image set up for the first time, which falls outside the scope of ECS.

By now you’ve hopefully learned that Fargate is even faster and easier to set up, and that its serverless compute engine will save you from a large, burdensome maintenance overhead – such as placement of resources, scheduling, scaling, and patching.

Fargate also integrates well with the rest of the AWS platform, which should help ensure that future expansion is well supported.

Costs are more flexible than before, and you can also purchase Savings Plans (a similar concept to EC2 Reserved Instances), to make significant savings for long-lived Fargate deployments – 12 and 36 month savings plans are available.

Consult an Expert

If you would like to learn more about any of the topics mentioned in this article, or would prefer to have somebody else do the work for you, then engaging with an experienced and verified AWS consulting partner is one of the best ways to progress.

Here at Ubertas Consulting, we offer expert advice on all manner of container-based greenfield and legacy work, plus many other AWS-related topics. We routinely work with a wide range of clients, large and small, across many industries.

Come and speak to us – we’d love to help.

Adrian Stacey

Senior AWS Solutions Architect at Ubertas Consulting