Modernising in AWS: Monoliths to Microservices Using AWS App Mesh

In the latest entry in our Modernisation Blog series, Solutions Architect Jim Wood compares monoliths and microservices at a high level, before exploring some of the ways in which App Mesh makes managing microservices easier. Let’s get started!

Monoliths

Unless you’ve been living in the dark ages, chances are you already know what a monolith is. You’ve more than likely owned or managed one before, and perhaps you still do. You will know the trouble they bring with them, which is probably what led you to this article.

Even so, let’s quickly set the scene and remind ourselves about them.

Typical Anatomy

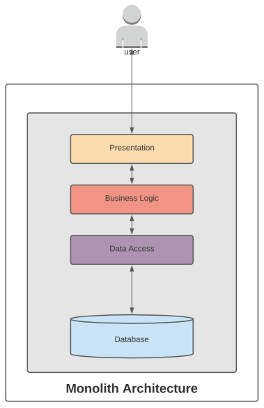

A typical monolith might consist of a classic ‘3-tier’ web application architecture, such as the one below:

If you’ve come from an ASP, .NET, Java or PHP background, you will likely be familiar with this pattern. You will also quite likely have deployed variations on the theme, such as the MVC (Model-View-Controller) pattern.

In a classic monolith, all functional areas of the application (UI, input validation, data processing, business logic, error handling, etc.) are in some way conjoined, and are built, deployed and run as a single unit.

Until fairly recently, monoliths were the favoured option for enterprise web applications, which is why development frameworks adopted patterns like MVC: to provide at least some separation of concerns. However, as anyone with experience of these frameworks will know, they do not solve the problem of managing at scale for very long. Monolith projects tend to reach a point where growing bloat and technical debt begin to cripple business agility, while maintenance costs keep rising. Confidence is lost.

Pros

- A single codebase/application to understand

- Can be managed and understood by one team

- Logging/debugging and monitoring are simple (only one running application to monitor)

- Single database (one source of truth for everything)

Cons

- Limited scalability

  - Vertical-only scaling

  - Horizontal scaling difficult or impossible (application is often stateful; processes are managed internally)

- Releases are cumbersome and dangerous (‘big-bang’ releases)

  - Very long build time

  - Even a tiny change results in the entire application being released

  - Prolonged testing required

  - Rollbacks are expensive and/or slow

- Fault intolerant

  - A failure in one part of the application can take down the entire application

- Databases become large and complex: harder to maintain, more expensive to scale and slower to restore, which hinders Disaster Recovery

- Resources are over-provisioned (and therefore under-utilised) to make up for the lack of horizontal scaling, resulting in high fixed costs

- TCO (total cost of ownership) is high

- Speed-to-market is slow
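
To see why in-process state gets in the way of horizontal scaling, here is a minimal Python sketch. The `AppInstance` class and `run_requests` helper are hypothetical: each copy of the "monolith" keeps shopping-cart sessions in its own memory, and a naive round-robin load balancer sends a user's second request to a different copy, which has never seen that session.

```python
from itertools import cycle


class AppInstance:
    """A hypothetical monolith instance that keeps session state in process memory."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.sessions: dict[str, list[str]] = {}  # session_id -> cart items

    def add_to_cart(self, session_id: str, item: str) -> list[str]:
        cart = self.sessions.setdefault(session_id, [])
        cart.append(item)
        return list(cart)


def run_requests(instances: list[AppInstance], requests: list[tuple[str, str]]) -> list[list[str]]:
    """Send each (session_id, item) request to the next instance, round-robin."""
    balancer = cycle(instances)
    return [next(balancer).add_to_cart(sid, item) for sid, item in requests]


# One instance: the cart accumulates as expected.
print(run_requests([AppInstance("a")], [("user-1", "book"), ("user-1", "pen")]))
# [['book'], ['book', 'pen']]

# Two instances behind a round-robin balancer: the second request lands on
# instance "b", which has no record of the first item.
print(run_requests([AppInstance("a"), AppInstance("b")], [("user-1", "book"), ("user-1", "pen")]))
# [['book'], ['pen']]
```

Moving that state into a shared store (a database or cache) is the usual prerequisite for running several copies side by side, and retrofitting it into an established monolith can be a significant undertaking.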

Microservices

In contrast to the monolith, a microservice is exactly what its name implies: a small, single area of functionality, running as a standalone service. With the right tools and frameworks, a single developer can build a new microservice quickly (in a matter of hours) and easily. They can deploy it quickly too, as each microservice can have its own CI/CD pipeline.
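
As an illustration of how small a single-purpose service can be, here is a minimal sketch using only Python's standard library. The "price lookup" service, its `/prices/<sku>` route and the `PRICES` data are all hypothetical; a production service would typically use a dedicated web framework and be packaged into a container before being deployed through its own pipeline.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical catalogue data owned exclusively by this service.
PRICES = {"sku-123": 9.99, "sku-456": 24.50}


class PriceHandler(BaseHTTPRequestHandler):
    """Serves GET /prices/<sku> and nothing else: one small area of functionality."""

    def do_GET(self) -> None:
        prefix = "/prices/"
        sku = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if sku in PRICES:
            status, body = 200, {"sku": sku, "price": PRICES[sku]}
        else:
            status, body = 404, {"error": "not found"}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def make_server(port: int = 8080) -> HTTPServer:
    """Create (but do not start) the service on localhost; port 0 picks a free port."""
    return HTTPServer(("127.0.0.1", port), PriceHandler)
```

Calling `make_server().serve_forever()` starts the service on port 8080, after which `GET /prices/sku-123` returns a small JSON document.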

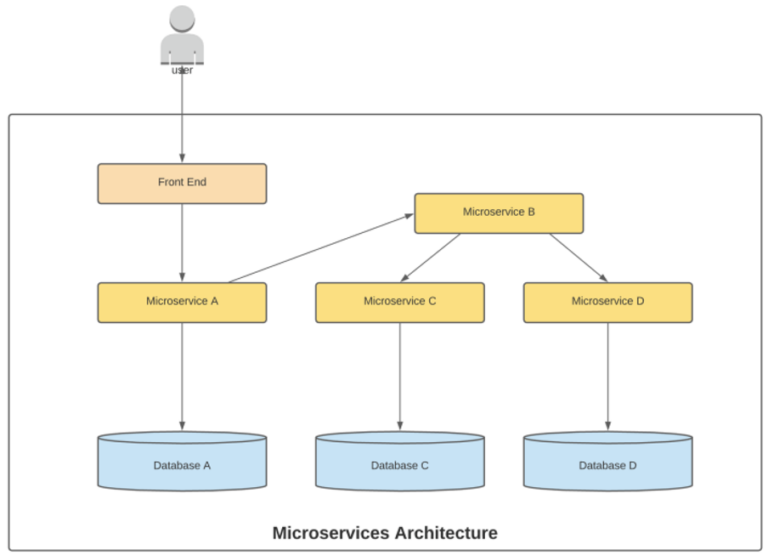

Here is how some parts of a monolithic application may look after migrating to microservices:

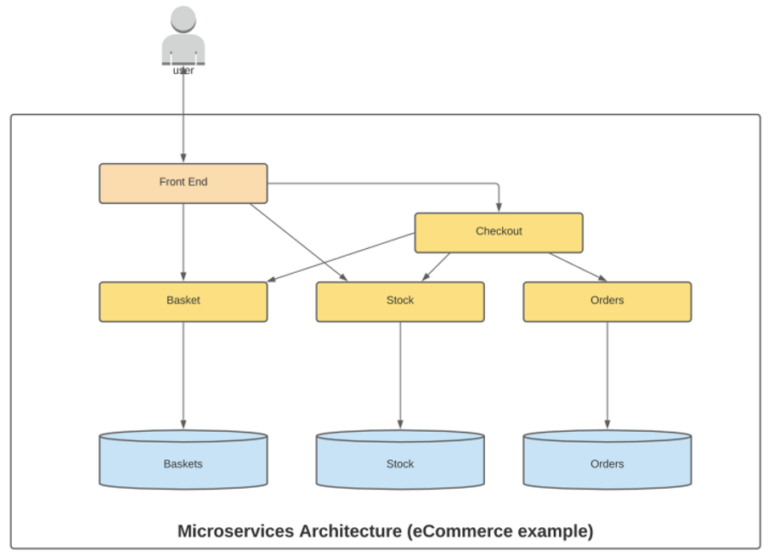

And here is how a real-world example of a microservice architecture might look, if you worked in eCommerce:

Microservice architectures have helped countless businesses, at varying scale, to become more agile in responding to changing market conditions and to gain better control of their spend, often moving from rigid Capital Expenditure (CapEx) cost models to more flexible monthly Operational Expenditure (OpEx) cost models.

Pros

- Easier for developers to build and deploy new features

  - Quicker to develop

  - Functional requirements are more concise and isolated

  - Quicker to build

- Testing is isolated

  - Service-specific tests

  - Containers can be run locally and in isolation

- Releases target a single service, not the entire application (service boundaries are often drawn using Domain-Driven Design)

- Speed-to-market improved

  - Product-based development (not project-based)

  - Respond more quickly to changing customer demands

  - Respond more quickly to competitors’ capabilities (and beat them)

- Increased portability of applications/services

  - Containers make application code platform agnostic

- Fault tolerant

  - Service containers are managed, and will self-heal or scale horizontally when needed

    - ECS services replace failed tasks to maintain the desired count

    - Auto-scaling adds or removes tasks as demand changes

  - One, or even several, services failing will not necessarily mean the entire user-facing application will fail (reduced blast radius)
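
The reduced blast radius only materialises if callers are written to tolerate a dependency failing. A minimal Python sketch of that idea, assuming a hypothetical product page composed from an essential product service and an optional recommendations service (both stubbed as plain functions here rather than network calls):

```python
from typing import Callable


def render_product_page(
    product_id: str,
    get_product: Callable[[str], dict],
    get_recommendations: Callable[[str], list],
) -> dict:
    """Compose a page from two services; only the product service is essential."""
    page = {"product": get_product(product_id)}  # a failure here is still fatal
    try:
        page["recommendations"] = get_recommendations(product_id)
    except (ConnectionError, TimeoutError):
        # The recommendations service is unavailable: degrade gracefully instead of failing.
        page["recommendations"] = []
    return page


def healthy_product_service(product_id: str) -> dict:
    return {"id": product_id, "name": "Example product"}


def failing_recommendation_service(product_id: str) -> list:
    raise ConnectionError("recommendations service unavailable")


print(render_product_page("p-1", healthy_product_service, failing_recommendation_service))
# {'product': {'id': 'p-1', 'name': 'Example product'}, 'recommendations': []}
```

Deciding which dependencies are essential and which can be dropped is a product decision as much as a technical one; the pattern simply makes that decision explicit in code.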

Cons

- Harder to monitor (multiple services to monitor, and the number grows as functionality and scale increase)

  - End-to-end visibility/tracing becomes much harder as the number of services (and service containers) increases

- Security risk surface increases

  - More resources to secure

  - More network communication / access points
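
End-to-end tracing is usually tackled by passing a trace (or correlation) ID along with every request, so that log lines from different services can be joined up afterwards. A minimal Python sketch: the `x-trace-id` header name and the in-process "service chain" are hypothetical stand-ins for real HTTP calls (AWS X-Ray, for example, uses its own `X-Amzn-Trace-Id` header).

```python
import uuid

TRACE_HEADER = "x-trace-id"  # hypothetical header name, for illustration only


def propagate(incoming: dict[str, str]) -> dict[str, str]:
    """Reuse the caller's trace ID if there is one; otherwise start a new trace."""
    return {TRACE_HEADER: incoming.get(TRACE_HEADER) or uuid.uuid4().hex}


def call_chain(services: list[str], headers: dict[str, str]) -> list[tuple[str, str]]:
    """Simulate one request flowing through `services` in order, logging each hop."""
    log = []
    for service in services:
        headers = propagate(headers)  # each hop forwards the same trace ID downstream
        log.append((service, headers[TRACE_HEADER]))
    return log


hops = call_chain(["frontend", "orders", "payments"], headers={})
for service, trace_id in hops:
    print(service, trace_id)  # every line shows the same trace ID
```

Every service has to do this consistently, which becomes harder to guarantee as the number of services grows.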

Microservices on AWS

A typical microservice may be built using very lightweight, microservice-specific development frameworks. It is then packaged as a Docker container image. A running container is an isolated process (or group of processes) that shares the host’s operating-system kernel; think of it as a miniature VM, but without the overhead of a full guest operating system.

Docker containers are highly portable because the image bundles the application together with its libraries and dependencies. In other words, I can write a microservice and run it in a standalone container in my local development environment on my Mac. The developer next to me, on his PC, can run the exact same container image: not a Windows port of it, but the exact same image. That same image can be run on any other system with a compatible Docker host.

This is a powerful tool for:

- Portability

- Ease of development (and collaboration)

- Testing

- Dev-to-production parity

- Future proofing

AWS Container Services

AWS provides several managed services that take most of the heavy lifting out of building, deploying and maintaining microservice architectures in the Cloud:

Microservices on AWS (+ App Mesh)

AWS App Mesh aims to address some of the most common pitfalls of managing microservice architectures by building on pre-existing design patterns and tooling.

App Mesh Origins

Before getting stuck into how the AWS App Mesh service works, it’s important to understand some fundamentals, such as:

- Why it exists (the problems it was designed to solve)

- How it compares to pre-existing solutions & patterns (e.g. service mesh)

An introduction to the ‘Service Mesh’ pattern

Before AWS App Mesh came the Service Mesh pattern.

This might be a term you’ve heard before, particularly if you’ve had some exposure to managing large-scale Kubernetes clusters. If you haven’t heard of it before, or if you’re just unsure of exactly what it is, then this section is definitely for you.

Overview

A ‘service mesh’ is basically a dedicated layer of infrastructure that is used for service-to-service communication. This brings a range of benefits, including:

- Improved security

- Better observability of service-to-service communications

- More granular control over fault tolerance

  - Automatic retries

  - Back-off/roll-off policies

- Centralised management of the application ecosystem
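
In a service mesh, retry and back-off policies are configured on the proxy rather than written into each service, but the underlying logic is the same. A minimal Python sketch of retries with exponential back-off and jitter; the function name, parameters and default values are assumptions for illustration, not App Mesh or Envoy defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    max_retries: int = 3,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call`, retrying transient failures with exponential back-off and jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except retry_on:
            if attempt == max_retries:
                raise  # retries exhausted: surface the last error to the caller
            # The back-off ceiling doubles each attempt (0.1s, 0.2s, 0.4s, ...), capped at max_delay.
            ceiling = min(max_delay, base_delay * 2**attempt)
            sleep(random.uniform(0, ceiling))  # "full jitter" avoids synchronised retry storms
    raise ValueError("max_retries must be >= 0")


# Usage: a hypothetical dependency that fails twice before succeeding.
attempts = {"n": 0}


def flaky_inventory_lookup() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ConnectionError("transient network error")
    return "in stock"


print(call_with_retries(flaky_inventory_lookup))  # in stock
```

Jitter matters at scale: without it, many callers that failed at the same moment would all retry at the same moment too.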

Data Plane

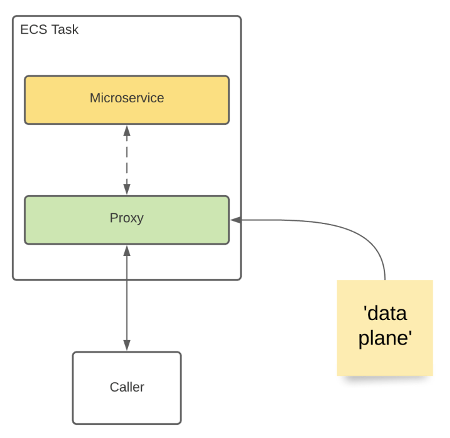

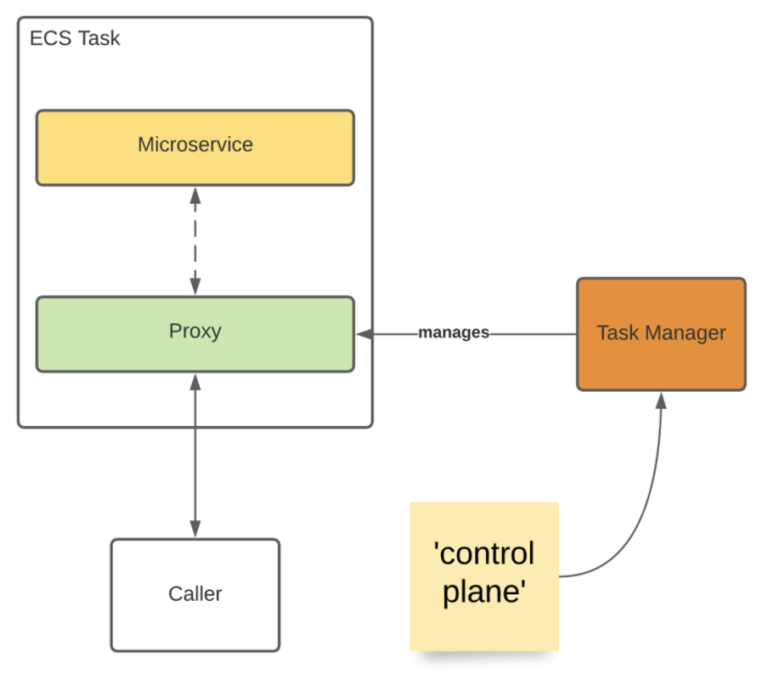

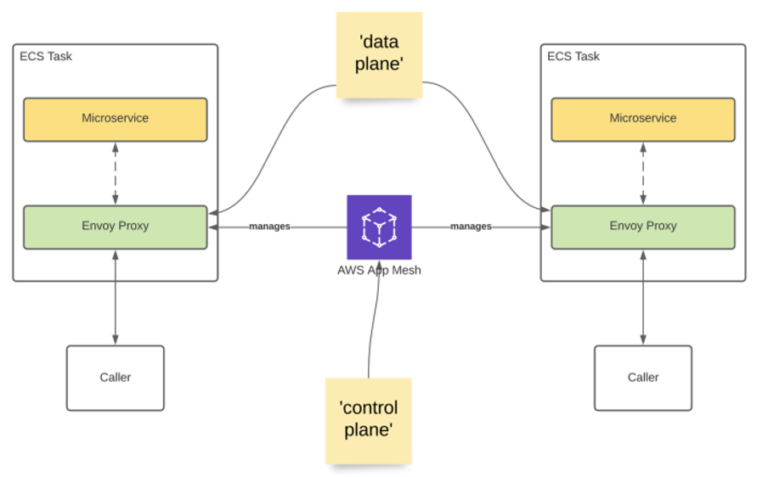

In its absolute simplest form, a Service Mesh starts with the idea of placing a network proxy in front of each running service in our microservice architecture, typically as a sidecar container that runs alongside the service (for example, in the same ECS task, or in the same Kubernetes pod, where the App Mesh controller can inject it automatically).

This is called the ‘data plane’.

The data plane intercepts calls between different services and processes them, in the same way that any other proxy might work. In the diagram above, the ‘caller’ will typically be another service in our microservice architecture.
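
The interception idea can be modelled in a few lines of Python. This is a toy, in-process sketch (the `SidecarProxy` class is hypothetical): a real data-plane proxy such as Envoy runs as a separate process on the network path, but the principle is the same, in that the caller reaches the service only via the proxy, and the proxy observes every call.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SidecarProxy:
    """A toy stand-in for a data-plane proxy placed in front of one service.

    Callers talk to the proxy; the proxy forwards to the service and records
    metrics, so the service function itself contains no telemetry logic.
    """

    service_name: str
    upstream: Callable[[str], str]
    requests: int = 0
    errors: int = 0
    latencies_ms: list[float] = field(default_factory=list)

    def handle(self, request: str) -> str:
        self.requests += 1
        start = time.perf_counter()
        try:
            return self.upstream(request)
        except Exception:
            self.errors += 1  # count the failure, then let the caller see it
            raise
        finally:
            self.latencies_ms.append((time.perf_counter() - start) * 1000)


def greeting_service(name: str) -> str:
    return f"Hello, {name}!"


proxy = SidecarProxy("greeter", greeting_service)
print(proxy.handle("caller"))        # Hello, caller!
print(proxy.requests, proxy.errors)  # 1 0
```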

Control Plane

The data plane proxies are then managed by a separate, automated management process, called the ‘Control Plane’.

Not only does the control plane coordinate the behaviour of proxies, it also provides APIs for ops teams to control and observe the whole network.
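
A toy Python model of that relationship, assuming a hypothetical and much-simplified configuration schema: the control plane holds the desired configuration and pushes it to every registered proxy, including proxies that join later. (Envoy itself receives configuration from a control plane over its xDS APIs; this sketch does not model that protocol.)

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MeshConfig:
    """Desired behaviour for every proxy (a hypothetical, simplified schema)."""

    routes: dict[str, dict[str, int]]  # service -> {backend version: traffic weight}
    max_retries: int = 2


@dataclass
class Proxy:
    name: str
    config: Optional[MeshConfig] = None

    def apply(self, config: MeshConfig) -> None:
        self.config = config  # a real proxy would hot-reload routes, retries, etc.


@dataclass
class ControlPlane:
    proxies: list[Proxy] = field(default_factory=list)
    current: Optional[MeshConfig] = None

    def register(self, proxy: Proxy) -> None:
        self.proxies.append(proxy)
        if self.current is not None:
            proxy.apply(self.current)  # new proxies start with the latest config

    def publish(self, config: MeshConfig) -> None:
        """Ops teams change behaviour in one place; every proxy is updated."""
        self.current = config
        for proxy in self.proxies:
            proxy.apply(config)


cp = ControlPlane()
orders, payments = Proxy("orders-sidecar"), Proxy("payments-sidecar")
cp.register(orders)
cp.register(payments)
cp.publish(MeshConfig(routes={"orders": {"orders-v1": 90, "orders-v2": 10}}))
late = Proxy("shipping-sidecar")
cp.register(late)
print(all(p.config is cp.current for p in (orders, payments, late)))  # True
```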

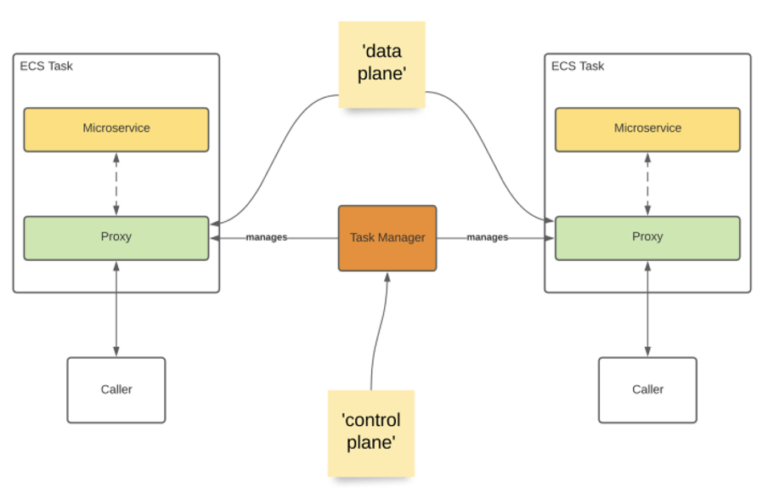

Putting it all together

In the diagram below, you can see how a deliberately small and simple microservice ecosystem is managed.

Imagine the benefits this could bring at scale, with tens, hundreds or even thousands of distributed microservices.

Imagine also the limitations of our self-managed Task Manager (Control Plane) at such a scale.

Mapping AWS App Mesh to the Service Mesh Pattern

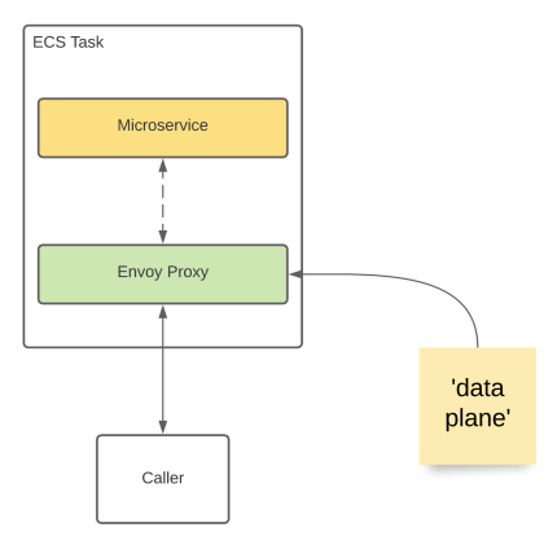

Data Plane

AWS App Mesh uses the open source Envoy Proxy to provide its ‘Data Plane’.

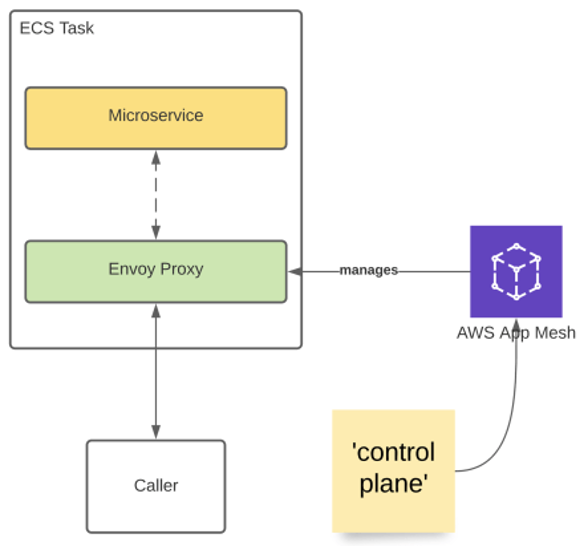

Control Plane

AWS App Mesh is a fully managed ‘Control Plane’ service that supports Amazon ECS (including Fargate), Amazon EKS (Kubernetes) and Amazon EC2.
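
Because App Mesh is the control plane, routing and retry behaviour is declared through its API rather than coded into each service. A hedged Python sketch that builds an HTTP route spec splitting traffic between two virtual nodes (a simple canary release) with a retry policy attached. The mesh, router, route and node names are hypothetical, and the field names are based on the App Mesh `CreateRoute` API as exposed by boto3's `appmesh` client; check them against the current API reference before relying on them.

```python
import json


def canary_route_spec(stable_node: str, canary_node: str, canary_weight: int) -> dict:
    """Build an App Mesh HTTP route spec sending `canary_weight`% of traffic to a new version."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return {
        "httpRoute": {
            "match": {"prefix": "/"},  # match every request path
            "action": {
                "weightedTargets": [
                    {"virtualNode": stable_node, "weight": 100 - canary_weight},
                    {"virtualNode": canary_node, "weight": canary_weight},
                ]
            },
            # Retries are performed by the Envoy proxies, not by application code.
            "retryPolicy": {
                "maxRetries": 2,
                "perRetryTimeout": {"unit": "ms", "value": 2000},
                "httpRetryEvents": ["server-error", "gateway-error"],
                "tcpRetryEvents": ["connection-error"],
            },
        }
    }


def create_canary_route(client, mesh: str, router: str, route: str, spec: dict) -> dict:
    """Submit the route to App Mesh; `client` is expected to be boto3.client("appmesh")."""
    return client.create_route(
        meshName=mesh, virtualRouterName=router, routeName=route, spec=spec
    )


# Hypothetical names: an "orders" service with v1 (stable) and v2 (canary) virtual nodes.
spec = canary_route_spec("orders-v1", "orders-v2", canary_weight=10)
print(json.dumps(spec["httpRoute"]["action"], indent=2))

# With AWS credentials configured and the mesh, router and nodes already created:
# import boto3
# create_canary_route(boto3.client("appmesh"), "shop-mesh", "orders-router", "orders-route", spec)
```

Shifting more traffic to the new version is then a matter of publishing an updated route with a higher `canary_weight`; no service needs to be redeployed.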

Putting it all together

As you can see, the high-level topology looks similar to before. However, we no longer need to manage the Control Plane ourselves, which removes the need to continually upgrade, patch, scale and secure it. More of our precious time can be spent focussing on our customers’ needs, rather than our own.

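As with other AWS services, App Mesh is a highly available, managed service. There is no additional charge for App Mesh itself: you pay only for the AWS resources your Envoy proxies consume (for example, EC2 instances or Fargate CPU and memory), on a pay-as-you-go (OpEx) basis.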

It also integrates with other AWS services, such as AWS X-Ray and Amazon CloudWatch.

Centralised Tracing & Monitoring

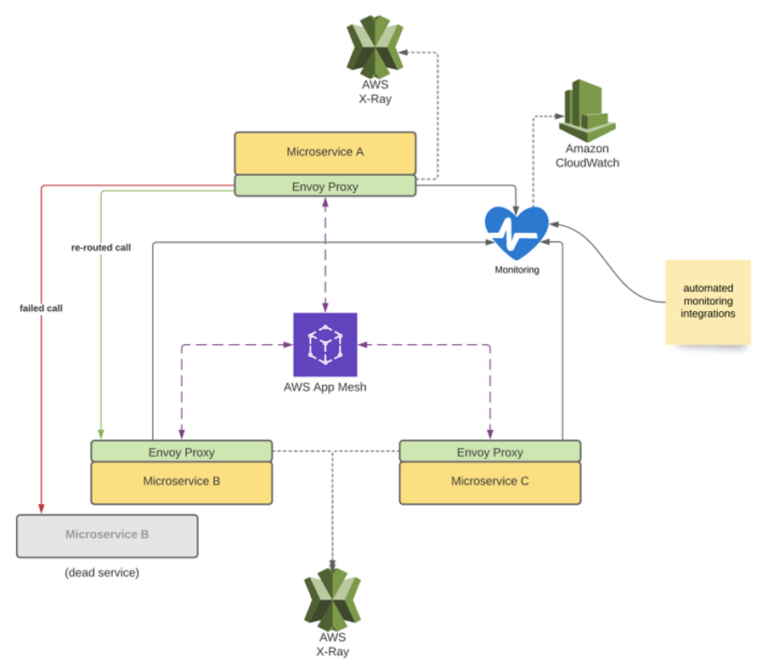

With App Mesh and Envoy Proxy in place, it is now much easier to ensure that all services are properly traced and monitored, as both these integrations will be managed for us.

In the diagram below, AWS X-Ray is being used to provide full end-to-end tracing, whilst CloudWatch could be used for centralised logging, monitoring and container insights, for instance. See too, how calls to unhealthy endpoints are rapidly re-routed to healthy ones by Envoy Proxy (an example of App Mesh’s automatic self-healing and service discovery capabilities).
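
Re-routing away from unhealthy endpoints comes from proxy mechanisms such as health checks and outlier detection (App Mesh exposes outlier detection settings on virtual node listeners). A simplified Python model of outlier detection, under the assumption that an endpoint is ejected after a fixed number of consecutive failures; the class names, addresses and threshold are hypothetical, and real proxies also return ejected hosts to the pool after an ejection period, which this sketch omits.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    address: str
    consecutive_errors: int = 0
    ejected: bool = False


@dataclass
class OutlierAwareBalancer:
    """Round-robin over endpoints, ejecting any that fail too many times in a row."""

    endpoints: list[Endpoint]
    max_consecutive_errors: int = 3
    _next: int = field(default=0, init=False)

    def pick(self) -> Endpoint:
        healthy = [e for e in self.endpoints if not e.ejected]
        if not healthy:
            raise RuntimeError("no healthy endpoints available")
        endpoint = healthy[self._next % len(healthy)]
        self._next += 1
        return endpoint

    def report(self, endpoint: Endpoint, ok: bool) -> None:
        """Record the outcome of a call; a success resets the failure streak."""
        if ok:
            endpoint.consecutive_errors = 0
            return
        endpoint.consecutive_errors += 1
        if endpoint.consecutive_errors >= self.max_consecutive_errors:
            endpoint.ejected = True  # stop routing traffic to this endpoint


balancer = OutlierAwareBalancer([Endpoint("10.0.0.1"), Endpoint("10.0.0.2"), Endpoint("10.0.0.3")])
bad = balancer.endpoints[2]  # pretend this instance has become unhealthy
for _ in range(9):
    ep = balancer.pick()
    balancer.report(ep, ok=ep is not bad)
print(bad.ejected)  # True
print(sorted({balancer.pick().address for _ in range(10)}))  # ['10.0.0.1', '10.0.0.2']
```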

Conclusion

We hope you enjoyed this whistle-stop tour of the microservice evolution, and that it provides some stimulus for further debate with your colleagues. Perhaps you can now build a small POC or run a lab session, and begin exploring more features of App Mesh. It’s only a sprint away!

Alternatively, if you would like to learn more from a real human being (remember what they look like?), feel free to reach out to us at Ubertas Consulting. We are a team of highly skilled, experienced and certified consultants, as well as an AWS Trusted Partner, who can offer tailored advice for all manner of AWS implementations, migrations and modernisations. We’d love to help.

Jim Wood

Solutions Architect at Ubertas Consulting